Machine learning materials physics

A combination of machine learning methods competes with first-order dynamics in the search for equilibrium states

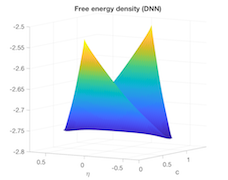

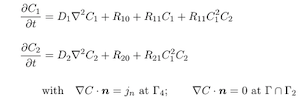

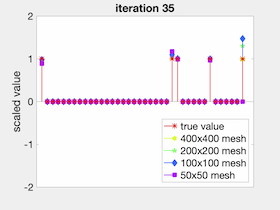

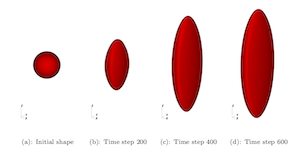

A cornerstone of computational models for phase-transforming materials is the class of first-order dynamics equations called phase-field methods, including the widely used Cahn-Hilliard and Allen-Cahn models. Phase-field methods may be regarded as imposing first-order dynamics to traverse a free energy landscape in search of equilibrium configurations, and they lead to fascinating evolving patterns, which we and others have studied. However, if only the equilibrium states are of interest, there may be alternatives to phase-field dynamics, which can get trapped in very slow regimes or struggle through stiff responses of the numerical solution. We have therefore forged a combination of machine learning techniques, including surrogate optimization, multi-fidelity modelling and sensitivity analysis, as an alternative to phase-field dynamics for computing precipitate morphologies in a phase-separating alloy. As we show, not only can machine learning approaches succeed at finding the corresponding minima, but the resulting workflows also naturally leverage heterogeneous computing architectures, which deliver efficiencies in terms of raw FLOP counts.
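To make the contrast between the two search strategies concrete, here is a minimal toy sketch in Python. It is not the method of the paper: the one-dimensional tilted double-well energy, the Gaussian radial-basis-function surrogate, and every function name and parameter value below are assumptions chosen purely for illustration. It compares forward-Euler integration of first-order dynamics, dx/dt = -dF/dx, with a simple sample-fit-minimize surrogate loop on the same energy.

```python
import numpy as np

def free_energy(x):
    # Hypothetical tilted double-well energy (illustrative, not from the paper).
    return (x**2 - 1.0) ** 2 + 0.3 * x

def free_energy_grad(x):
    # Analytical derivative dF/dx of the toy energy above.
    return 4.0 * x * (x**2 - 1.0) + 0.3

def gradient_flow(x0, dt=0.01, steps=5000):
    # Forward-Euler integration of the first-order dynamics dx/dt = -dF/dx.
    x = x0
    for _ in range(steps):
        x = x - dt * free_energy_grad(x)
    return x

def rbf_surrogate(xs, ys, length=0.5):
    # Gaussian radial-basis-function interpolant through the samples (xs, ys).
    K = np.exp(-((xs[:, None] - xs[None, :]) ** 2) / (2 * length**2))
    # A small diagonal jitter keeps the linear solve well posed.
    w = np.linalg.solve(K + 1e-8 * np.eye(len(xs)), ys)

    def predict(x):
        x = np.atleast_1d(x)
        k = np.exp(-((x[:, None] - xs[None, :]) ** 2) / (2 * length**2))
        return k @ w

    return predict

def surrogate_minimize(lo=-2.0, hi=2.0, n_init=5, n_iter=10):
    # Sample the energy, fit a cheap surrogate, minimize the surrogate on a
    # grid, evaluate the true energy at that point, and refit.
    xs = np.linspace(lo, hi, n_init)
    ys = free_energy(xs)
    grid = np.linspace(lo, hi, 2001)
    for _ in range(n_iter):
        predict = rbf_surrogate(xs, ys)
        x_new = grid[np.argmin(predict(grid))]
        if np.min(np.abs(xs - x_new)) < 1e-6:
            break  # the surrogate minimum coincides with an existing sample
        xs = np.append(xs, x_new)
        ys = np.append(ys, free_energy(x_new))
    # Return the best point actually evaluated on the true energy.
    return xs[np.argmin(ys)]

if __name__ == "__main__":
    print("gradient flow from x0 = 0.9:", gradient_flow(0.9))
    print("surrogate search on [-2, 2]:", surrogate_minimize())
```

In this toy setting, the gradient flow started at x0 = 0.9 settles in the shallower right-hand well, while the surrogate search, which samples the whole interval, returns a point in the deeper left-hand well. The actual problem of precipitate morphology is far higher-dimensional, so this illustrates the idea only and says nothing about relative cost.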

Papers:

- Machine learning materials physics: Surrogate optimization and multi-fidelity algorithms predict precipitate morphology in an alternative to phase field dynamics. G. Teichert, K. Garikipati. Computer Methods in Applied Mechanics and Engineering, October 2018, doi.org/10.1016/j.cma.2018.10.025 [available on arXiv]

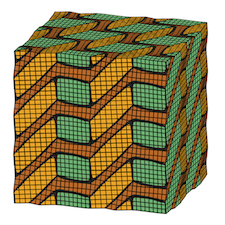

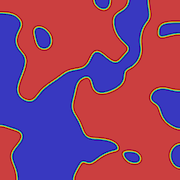

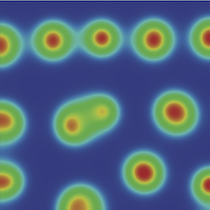

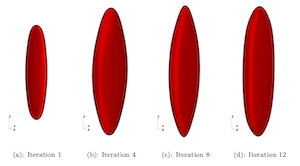

Shown here are comparisons of precipitate morphologies computed with the machine learning methods (left) and with phase-field dynamics (right).